- Videos: 487

- Views: 21,686,127

Steve Brunton

USA

Added: Oct 7, 2011

Neural Network Control in Collimator 2.0 & New Educational Videos!!!

Lots of exciting new developments in Collimator 2.0! The new neural network control block makes it easy and flexible to incorporate machine learning into advanced nonlinear control design.

Check out Collimator: collimator.ai

Jared Callaham has worked through several examples in this brilliant 4-part lecture series. Check them out here:

Part 1: ruclips.net/video/jAb-StdN-Cs/видео.html

Part 2: ruclips.net/video/fVUoF3uljfQ/видео.html

Part 3: ruclips.net/video/YkUh6lDfB-I/видео.html

Part 4: ruclips.net/video/PoZCjVTBihg/видео.html

Views: 4,225

Videos

Residual Networks (ResNet) [Physics Informed Machine Learning]

30K views · 1 day ago

This video discusses Residual Networks, one of the most popular machine learning architectures that has enabled considerably deeper neural networks through jump/skip connections. This architecture mimics many of the aspects of a numerical integrator. This video was produced at the University of Washington, and we acknowledge funding support from the Boeing Company %%% CHAPTERS %%% 00:00 Intro 0...

Neural ODEs (NODEs) [Physics Informed Machine Learning]

48K views · 21 days ago

This video describes Neural ODEs, a powerful machine learning approach to learn ODEs from data. This video was produced at the University of Washington, and we acknowledge funding support from the Boeing Company %%% CHAPTERS %%% 00:00 Intro 02:09 Background: ResNet 05:05 From ResNet to ODE 07:59 ODE Essential Insight/ Why ODE outperforms ResNet // 09:05 ODE Essential Insight Rephrase 1 // 09:54...

Physics Informed Neural Networks (PINNs) [Physics Informed Machine Learning]

41K views · 1 month ago

This video introduces PINNs, or Physics Informed Neural Networks. PINNs are a simple modification of a neural network that adds a PDE in the loss function to promote solutions that satisfy known physics. For example, if we wish to model a fluid flow field and we know it is incompressible, we can add the divergence of the field in the loss function to drive it towards zero. This approach relies ...
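As a rough illustration of the idea described above (penalizing the divergence of a predicted flow field in the loss), here is a minimal sketch in plain Python. It is not taken from the video: the function names `divergence_penalty` and `total_loss`, the `weight` parameter, and the two toy fields are hypothetical, and central finite differences stand in for the automatic differentiation a PINN would normally use.

```python
def divergence_penalty(u, v, points, eps=1e-5):
    """Mean squared divergence of the 2D field (u, v) at the sample points.

    Derivatives are approximated with central finite differences
    (an assumption made here to keep the sketch dependency-free).
    """
    total = 0.0
    for x, y in points:
        du_dx = (u(x + eps, y) - u(x - eps, y)) / (2 * eps)
        dv_dy = (v(x, y + eps) - v(x, y - eps)) / (2 * eps)
        total += (du_dx + dv_dy) ** 2
    return total / len(points)


def total_loss(data_loss, u, v, points, weight=1.0):
    """Data-fit loss plus a weighted physics penalty (hypothetical weighting)."""
    return data_loss + weight * divergence_penalty(u, v, points)


# Toy sample points and fields, chosen only for illustration.
points = [(0.1, 0.2), (0.5, -0.3), (-0.7, 0.9)]

# Rigid rotation (u, v) = (-y, x) is divergence-free, so the penalty vanishes.
rotation_penalty = divergence_penalty(lambda x, y: -y, lambda x, y: x, points)

# Radial expansion (u, v) = (x, y) has divergence 2, so the penalty is about 4.
expansion_penalty = divergence_penalty(lambda x, y: x, lambda x, y: y, points)
```

In this sketch, a field that violates incompressibility is charged an extra cost, so an optimizer minimizing `total_loss` is pushed toward divergence-free solutions.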

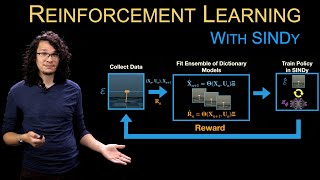

SINDy-RL: Interpretable and Efficient Model-Based Reinforcement Learning

15K views · 1 month ago

SINDy-RL: Interpretable and Efficient Model-Based Reinforcement Learning by Nicholas Zolman, Urban Fasel, J. Nathan Kutz, Steven L. Brunton arxiv paper: arxiv.org/abs/2403.09110 github code: github.com/nzolman/sindy-rl Deep reinforcement learning (DRL) has shown significant promise for uncovering sophisticated control policies that interact in environments with complicated dynamics, such as sta...

AI/ML+Physics: Preview of Upcoming Modules and Bootcamps [Physics Informed Machine Learning]

16K views · 1 month ago

This video provides a brief preview of the upcoming modules and bootcamps in this series on Physics Informed Machine Learning. Topics include: (1) Parsimonious modeling and SINDy; (2) Physics informed neural networks (PINNs); (3) Operator methods, like DeepONets and Fourier Neural Operators; (4) Symmetries in physics and machine learning; (5) Digital Twin technology; and (6) Case studies in eng...

AI/ML+Physics: Recap and Summary [Physics Informed Machine Learning]

15K views · 2 months ago

This video provides a brief recap of this introductory series on Physics Informed Machine Learning. We revisit the five stages of machine learning, and how physics may be incorporated into these stages. We also discuss architectures, symmetries, the digital twin, applications in engineering, and the importance of dynamical systems and controls benchmarks. This video was produced at the Universi...

Using sparse trajectory data to find Lagrangian Coherent Structures (LCS) in fluid flows

8K views · 2 months ago

Video by Tanner Harms, based on "Lagrangian Gradient Regression for the Detection of Coherent Structures from Sparse Trajectory Data" by Tanner D. Harms, Steven L. Brunton, Beverley J. McKeon arxiv.org/abs/2310.10994 The method of Lagrangian Coherent Structures (LCS) uses particle trajectories in fluid flows to identify coherent structures that govern the behavior of the flow. The typical metho...

AI/ML+Physics Part 5: Employing an Optimization Algorithm [Physics Informed Machine Learning]

14K views · 2 months ago

This video discusses the fifth stage of the machine learning process: (5) selecting and implementing an optimization algorithm to train the model. There are opportunities to incorporate physics into this stage of the process, such as using constrained optimization to force a model onto a subspace or submanifold characterized by a symmetry or other physical constraint. This video was produced at...

The Future of Model Based Engineering: Collimator 2.0

18K views · 2 months ago

Learn more at www.collimator.ai/

Collimator allows you to model, simulate, optimize, control, and collaborate in the cloud, with the power of Python and JAX.

New features:

* Powered by JAX
* Generative AI
* Auto-Differentiation
* PID Auto-Tune
* SINDy model blocks
* Model Predictive Control
* Real-Time Collaboration
* Hardware in the Loop
* Hybrid models
* State machines
* FMU support
* Updated ...

AI/ML+Physics Part 4: Crafting a Loss Function [Physics Informed Machine Learning]

27K views · 3 months ago

This video discusses the fourth stage of the machine learning process: (4) designing a loss function to assess the performance of the model. There are opportunities to incorporate physics into this stage of the process, such as adding regularization terms to promote sparsity or extra loss functions to ensure that a partial differential equation is satisfied, as in PINNs. This video was produced...

AI/ML+Physics Part 3: Designing an Architecture [Physics Informed Machine Learning]

34K views · 3 months ago

This video discusses the third stage of the machine learning process: (3) choosing an architecture with which to represent the model. This is one of the most exciting stages, including all of the new architectures, such as UNets, ResNets, SINDy, PINNs, Operator networks, and many more. There are opportunities to incorporate physics into this stage of the process, such as incorporating known sym...

AI/ML+Physics Part 2: Curating Training Data [Physics Informed Machine Learning]

27K views · 3 months ago

This video discusses the second stage of the machine learning process: (2) collecting and curating training data to inform the model. There are opportunities to incorporate physics into this stage of the process, such as data augmentation to incorporate known symmetries. This video was produced at the University of Washington, and we acknowledge funding support from the Boeing Company %%% CHAPT...

AI/ML+Physics Part 1: Choosing what to model [Physics Informed Machine Learning]

68K views · 4 months ago

This video discusses the first stage of the machine learning process: (1) formulating a problem to model. There are lots of opportunities to incorporate physics into this process, and learn new physics by applying ML to the right problem. This video was produced at the University of Washington, and we acknowledge funding support from the Boeing Company %%% CHAPTERS %%% 00:00 Intro 04:51 Decidin...

Physics Informed Machine Learning: High Level Overview of AI and ML in Science and Engineering

208K views · 4 months ago

Can we make commercial aircraft faster? Mitigating transonic buffet with porous trailing edges

9K views · 5 months ago

Supervised & Unsupervised Machine Learning

23K views · 5 months ago

A Machine Learning Primer: How to Build an ML Model

43K views · 5 months ago

Arousal as a universal embedding for spatiotemporal brain dynamics

25K views · 6 months ago

New Advances in Artificial Intelligence and Machine Learning

72K views · 6 months ago

Nonlinear parametric models of viscoelastic fluid flows with SINDy

6K views · 6 months ago

[5/8] Control for Societal-Scale Challenges: Road Map 2030 [Technology, Validation, and Transition]

10K views · 11 months ago

[8/8] Control for Societal-Scale Challenges: Road Map 2030 [Recommendations]

6K views · 11 months ago

[2/8] Control for Societal-Scale Challenges: Road Map 2030 [Societal Drivers]

7K views · 11 months ago

[1/8] Control for Societal-Scale Challenges: Road Map 2030 [Introduction]

15K views · 11 months ago

[6/8] Control for Societal-Scale Challenges: Road Map 2030 [Education]

3.4K views · 11 months ago

[3/8] Control for Societal-Scale Challenges: Road Map 2030 [Technological Trends]

5K views · 11 months ago

[7/8] Control for Societal-Scale Challenges: Road Map 2030 [Ethics, Fairness, & Regulatory Issues]

2.1K views · 11 months ago

[4/8] Control for Societal-Scale Challenges: Road Map 2030 [Emerging Methodologies]

4.3K views · 11 months ago

Thanks a lot for sharing with us how things work and the updates of our time

are you writing on a glass board?

It seems to me that lecturer we see is the image of him in the 'mirror'

thank's 4 all u'r time & effort! best regards Steve!

Very ineffecient… stick to quantum… Virtual photon replication is the future. Swinging heavy electrons drains the powergrid.

What are you talking about

I'm reminded of Prof Tadashi Tokieda's "kendama" toy video - would make a fun example. ruclips.net/video/TkGawXjsltc/видео.html

very cool!

nice now try to balance a double pendulum

I thought he meant Prime from SSB 😛💕

Man I miss this bring it back 👄😻👅

What is deflection

The 🐐 PERIOD!

Played the intro a couple of times - Nice segue!

Why is it implicit that x(k+1)=x(k)+f(x) is Euler integration ? Can be any integrator depending on how you build f(x), Runge Kutta for example f is f(x) =h/6*(k1+2*k2+2*k3+k4).
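A minimal sketch of the point raised in this comment, in plain Python. The test ODE dx/dt = -x, the step size `h`, and all function names are assumptions chosen only for illustration. The same update form x(k+1) = x(k) + f(x(k)) reproduces forward Euler or classical fourth-order Runge-Kutta, depending on how the increment f is built.

```python
import math


def g(x):
    """Right-hand side of the illustrative ODE dx/dt = -x (an assumption)."""
    return -x


def euler_increment(x, h):
    """Increment f(x) = h * g(x): the update x + f(x) is forward Euler."""
    return h * g(x)


def rk4_increment(x, h):
    """Increment f(x) = h/6 * (k1 + 2*k2 + 2*k3 + k4): the update is classical RK4."""
    k1 = g(x)
    k2 = g(x + 0.5 * h * k1)
    k3 = g(x + 0.5 * h * k2)
    k4 = g(x + h * k3)
    return h / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)


def integrate(increment, x0, h, steps):
    """Apply the residual-style update x <- x + increment(x, h) repeatedly."""
    x = x0
    for _ in range(steps):
        x = x + increment(x, h)
    return x


h, steps = 0.1, 10                 # integrate from t = 0 to t = 1
exact = math.exp(-1.0)             # exact solution x(1) for x(0) = 1
euler_err = abs(integrate(euler_increment, 1.0, h, steps) - exact)
rk4_err = abs(integrate(rk4_increment, 1.0, h, steps) - exact)
```

Both integrators share the skip-connection structure; only the increment differs, and on this test problem the RK4 increment gives a much smaller error than the Euler increment at the same step size.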

Lifesaver

Will there be problem if the real system is chaotic?

Many thanks for sharing...

Isn't it supposed to be i + j + k at 10:48 of the video? What am I missing?

I am a video editor. If you need any help related to video editing you can contact me. I will share my portfolio

That's weird: the loss function you gave in the video is different from the one in your paper

Laplace transform and inverse Laplace transform are just Linear Transform forth and back. The same as Fourier transform, they are essentially Linear Transformations.

I can't download or open the PDF book. Is anyone else having the same problem?

Wow, I just relearned SVD in 13m whereas it took me hours in undergrad.

at 44:00 I think this inequality can be deduced using Taylor series expansion up to 2 terms for cosine

minute 41:00 I think the trick is |R-a| = sqrt((R-a)^2), which leads to sqrt(R^2 - 2aR + a^2). If theta = pi, the inequality becomes equal, so true, but if theta is different from pi, the term 2aR cos(theta) < 2aR, which is also true. Then you're right

What about periodic functions? Is there a way to get nice approximations with neural networks?

I'm highly critical of so-called YouTube "educators." I just watched several on SVD from MIT and Stanford, all of which were garbage. But this... this is art in its purest form. You are a scholar among scholars! Absolutely beautiful to watch unfold. Thank you!!!

Brunton is unparalleled until others do the clear board as well. re MIT tho..Strang is no joke, don't sleep on him. abstruse but distilled well, passionate dude yet elderly linear algebra notable

Hello! Do you have the example of double mass, spring and damper system?

As a Chemical Engineer that studied CFD in grad school turned Data Scientist, I absolutely love this and the fact that there is active research in the intersection of physics and AI.

Same here: a mechanical engineer who worked 3 years as a CFD engineer, currently working as an AI robotics engineer

What if mass, energy, and momentum really are created? Then how will Gauss's divergence theorem work?

This is awesome! I am so stoked about how they have found a 15D linear system with an integer sparse off-diagonal matrix approximating the Lorenz attractor with such accuracy. It is a pure joy contemplating this mathematical elegance

It's not just a log function that has levels. The numbers themselves have levels. exp(i t) = exp(i (t + 2 pi k)) for all integer k It seems to me that any complex valued function also has levels. Why is the Log function singled out as special? Is it because it has a singularity?
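A short sketch of the identity quoted in this comment, using Python's standard `cmath` module. The angle `t` and the range of `k` are arbitrary choices made for illustration. It shows that the exponential maps many inputs to the same point, while `cmath.log` returns only the principal value, so the other "levels" have to be added back by hand as multiples of 2*pi*i.

```python
import cmath
import math

t = 0.7  # arbitrary illustrative angle (an assumption)
z = cmath.exp(1j * t)

# exp is periodic along the imaginary axis: shifting t by 2*pi*k gives the same z.
same_point = [cmath.exp(1j * (t + 2 * math.pi * k)) for k in (-2, -1, 0, 1, 2)]

# cmath.log returns only the principal value; other branches differ by 2*pi*i*k.
principal = cmath.log(z)
branches = [principal + 2j * math.pi * k for k in (-2, -1, 0, 1, 2)]

# Every branch is a valid logarithm: exponentiating any of them recovers z.
recovered = [cmath.exp(w) for w in branches]
```

One way to read this: because the exponential is many-to-one, its inverse has to choose among many outputs, which is where the branch structure of the logarithm comes from.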

epic lighting. subscribed.

Your choice of words is easy to understand and makes the lecture sound fun! Thank you! I will sit for my final this 29 June, pray for me🙏

9:21 so you change the lower bound to 0 because the lowest value we can obtain from solving the integral is now 0, rather than negative infinity, since you've defined it to be that way using H(t)? Is that about right?

You outdid yourself as usual

Amazing, Thank you

2024, still the best.

Fantastic video! Do you have any references for the mathematics behind the continuous adjoint method?

STEVE I ABSOLUTLY LOVE HOW SHARP AND CLEAN AND PACKED FULL OF INFO YET STILL DIGESTIBLE EVERYTHING IS!! You are my favorite teacher. I am a self learner and you are GOD MODE for that. PS: please do a video on why all power series are Taylor series (without borel heavy machinery if possible or by using borel but breaking everything down)!

Why is EVERY power series a Taylor series (without having to use heavy analysis stuff I don’t understand)!?

You know that it is going to be a great lecture series when Eigensteve is teaching you about eigenvalues and eigenvectors

tbh understanding his videos is very difficult, IMO he explains badly. Like 14:14 is the first more complicated part and I don't really get what it is about. I wouldn't understand ResNet from his explanation either if I had no prior knowledge about it. He just assumes that I am some expert in math and DLs

Suppose we have a discrete-time signal x[n]=exp(jwn) and it is periodic with period N. Then exp(jw(n+N))=exp(jwn), thus exp(jwN)=1. Because 1=exp(j2(pi)k) where k is an integer, the equation w=2(pi)k/N must hold. If N is chosen to be pi, which is not an integer, x[n] is not periodic and consequently has infinitely many unique values. In addition, for x[n] to be periodic, w must be a rational multiple of pi (true when k and N are integers).
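The periodicity condition in this comment can be checked numerically. The following is a minimal sketch in plain Python. The helper names `fundamental_period` and `numeric_period`, the search bound `max_n`, and the tolerance `tol` are all hypothetical choices made for illustration.

```python
import cmath
import math
from math import gcd


def fundamental_period(k, N):
    """Smallest period of x[n] = exp(j * 2*pi*k/N * n) for integers k and N > 0."""
    return N // gcd(k, N)


def numeric_period(w, max_n=100, tol=1e-9):
    """Smallest n in 1..max_n with exp(j*w*n) equal to 1 within tol, else None."""
    for n in range(1, max_n + 1):
        if abs(cmath.exp(1j * w * n) - 1) < tol:
            return n
    return None


# w = 2*pi*3/8 is a rational multiple of 2*pi, so the signal repeats every 8 samples.
period_rational = numeric_period(2 * math.pi * 3 / 8)

# w = 1 is not a rational multiple of 2*pi, so no period shows up within the search range.
period_irrational = numeric_period(1.0)
```

The numerical search can only rule out periods up to `max_n`; the general statement that no period exists for w = 1 rests on the algebraic argument in the comment, not on this check.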

I'm so much very grateful for these videos you make. Keep on the good work.

This is so technically correct, and simultaneously so obtuse, that my intuition fuse has melted. Please consider redoing this as 3D pseudo visualizations of data subsets.

tim cook?

How can sigma be invertible when it's a rectangular matrix?

This seems like you are changing your loss function not your network. Like there is some underlying field you are trying to approximate and you're not commenting on the structure of the network for that function. You are only concerning yourself with how you are evaluating that function (integrating) to compare to reality. I think it's more correct to call these ODE Loss Functions, Euler Loss Functions, or Lagrange Loss Functions for neural network evaluation.